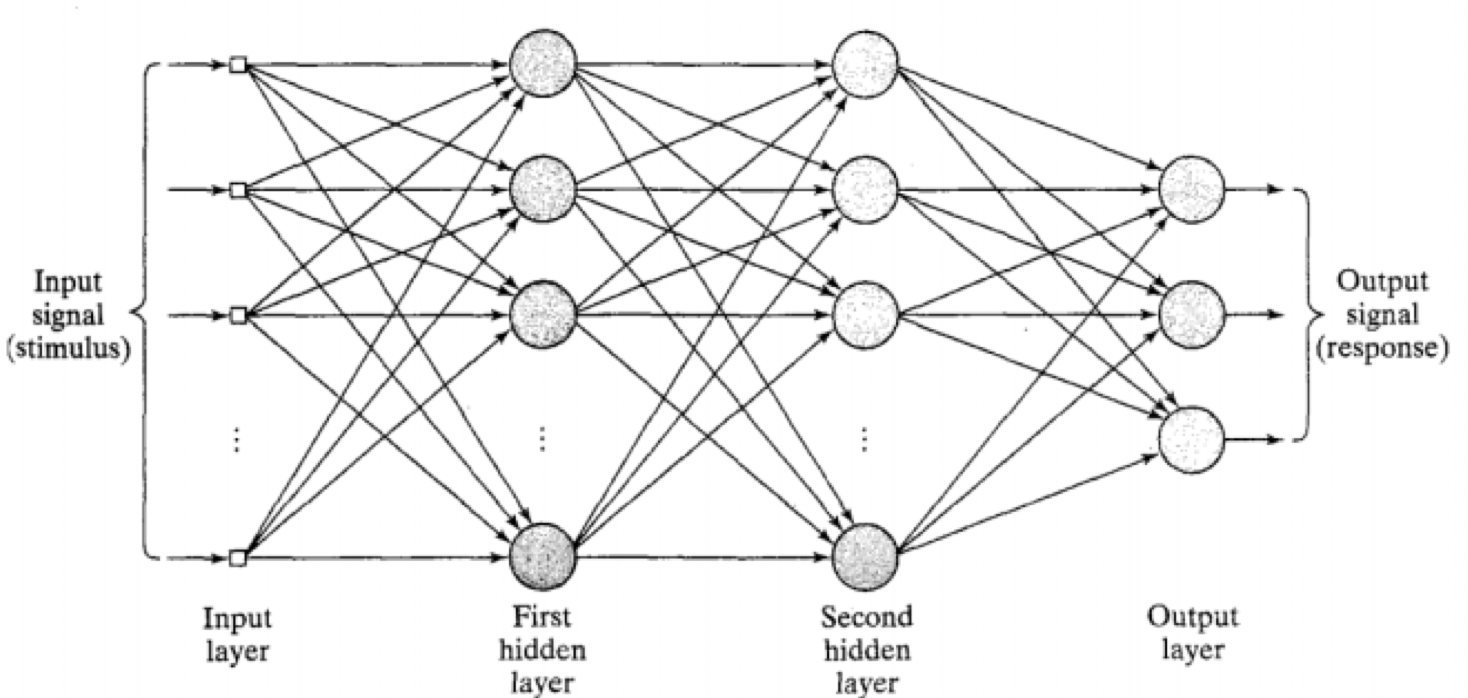

MLP (Multilayer Perceptron)

- Feedforward: signals flow from the input layer toward the output layer, with no feedback loops

- A single hidden layer can approximate any continuous function on a bounded domain, given enough hidden units

- This result is the universal approximation theorem

- Each hidden layer can operate as a different feature extraction layer

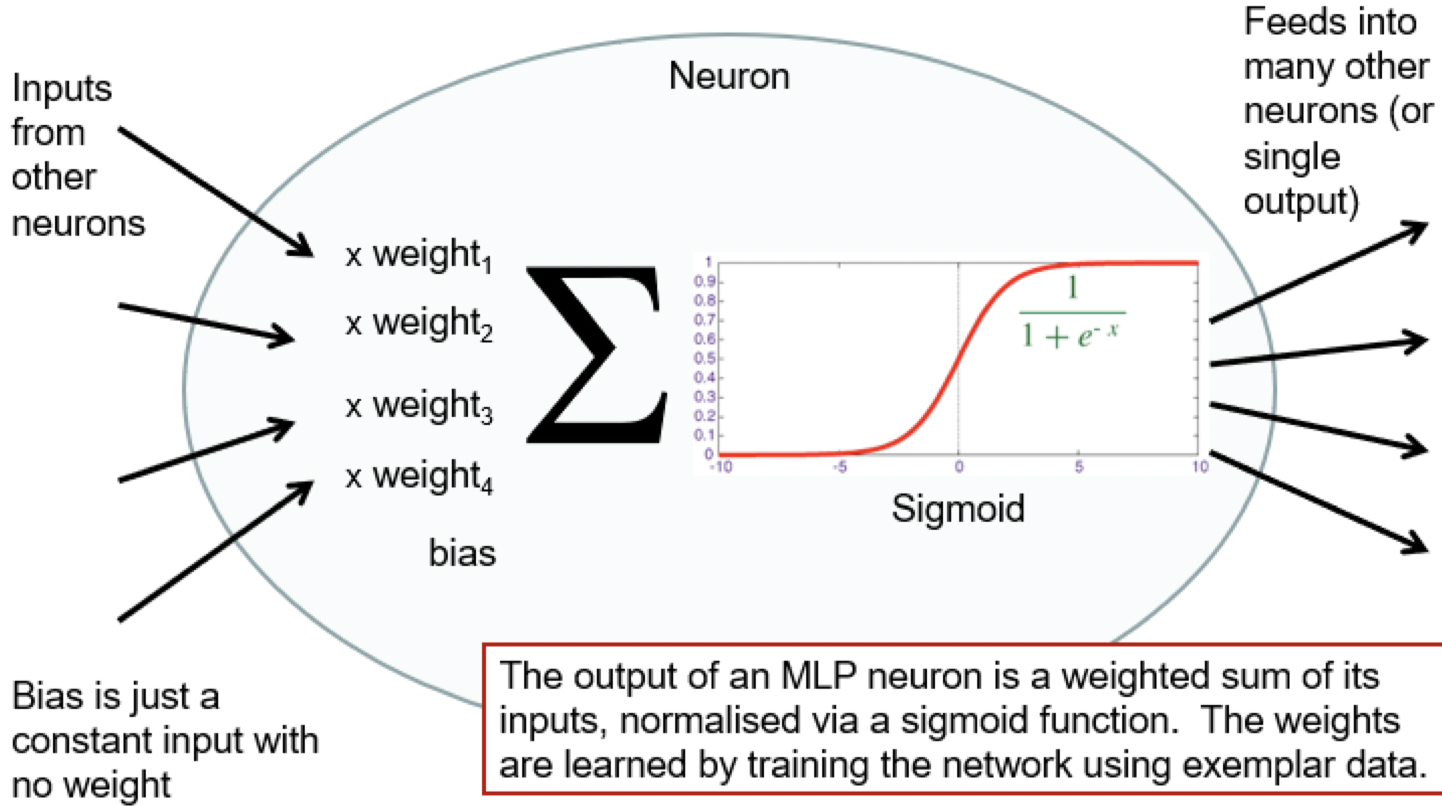
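A minimal NumPy sketch (NumPy assumed available) of a feedforward pass: each layer's output is computed once from the previous layer's output, with nothing fed back. The layer sizes, the `tanh` hidden activation, the linear output layer and the name `mlp_forward` are illustrative assumptions. Keeping every layer's activations makes it easy to inspect what each hidden layer has extracted.

```python
import numpy as np

def mlp_forward(x, layers):
    """Run a feedforward pass and return the activations of every layer."""
    activations = [x]
    for i, (W, b) in enumerate(layers):
        z = activations[-1] @ W + b          # weighted sum for this layer
        is_output = i == len(layers) - 1
        # Hidden layers apply a non-linearity; the output layer stays linear here
        activations.append(z if is_output else np.tanh(z))
    return activations

# Hypothetical sizes: 4 inputs, two hidden layers (8 and 6 units), 3 outputs
rng = np.random.default_rng(0)
sizes = [4, 8, 6, 3]
layers = [(rng.normal(0.0, 0.1, (n_in, n_out)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]

x = rng.normal(size=(5, 4))                  # batch of 5 input vectors
acts = mlp_forward(x, layers)
print([a.shape for a in acts])               # [(5, 4), (5, 8), (5, 6), (5, 3)]
```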

- Many weights to learn: every connection between adjacent layers has its own weight

- Back-propagation is supervised: it needs a target output for every training example
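To see how quickly the weights add up, here is a small Python sketch, assuming fully connected layers in which every unit also has a bias. The function name `count_parameters` and the 784-100-10 layout (a 28×28 image input) are hypothetical examples.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected MLP.

    A layer mapping n_in units to n_out units has n_in * n_out weights
    plus n_out biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

print(count_parameters([784, 100, 10]))  # 79510
```

For `[784, 100, 10]` this gives 78,400 + 100 + 1,000 + 10 = 79,510 parameters to learn, almost all of them in the first weight matrix.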

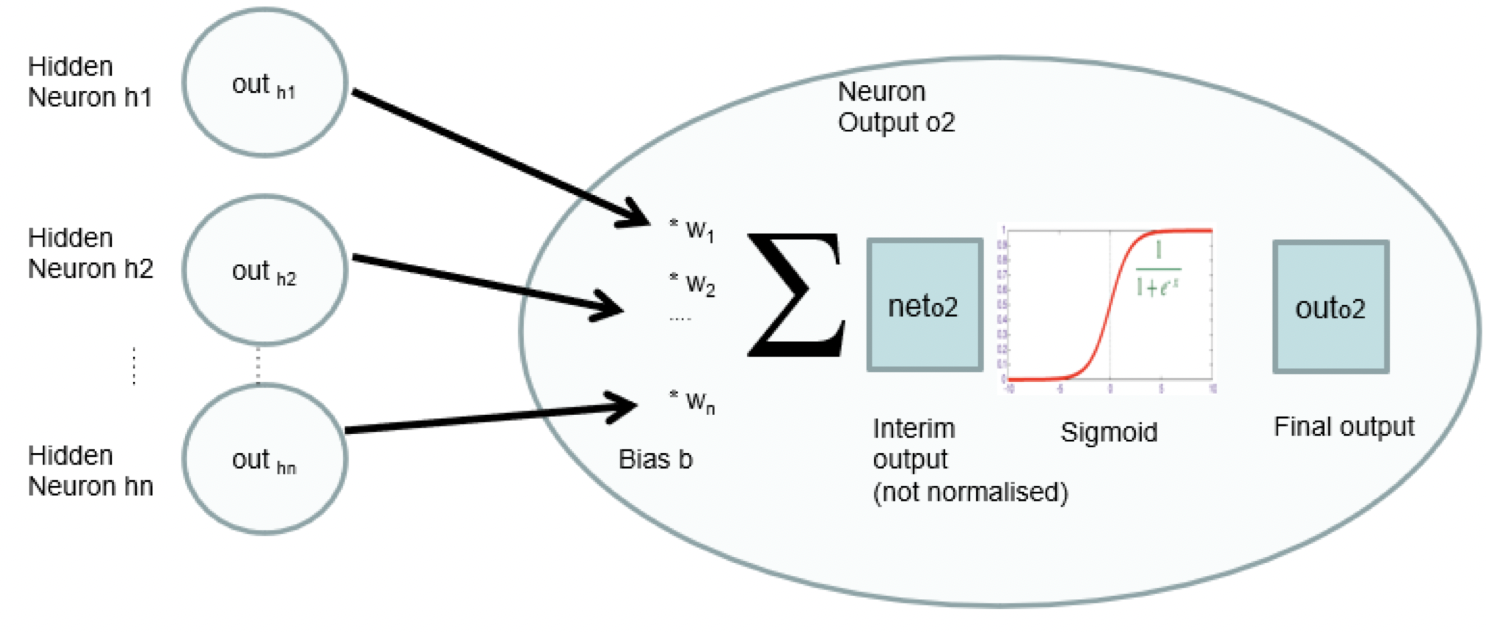

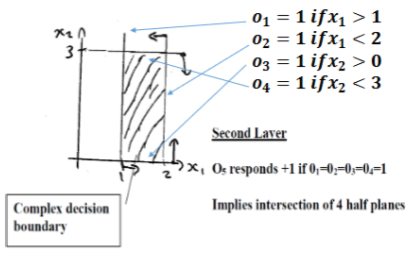
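A minimal sketch of why BP is supervised, assuming NumPy and a tiny 2-3-1 sigmoid network trained on XOR with mean-squared error (all illustrative choices). The backward pass starts from `Y - T`, the difference between the network output and the labeled target, so it cannot run without targets. The names `init_params`, `loss_and_grads` and `train` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written via tanh to avoid overflow in exp
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def init_params(n_in, n_hidden, n_out, seed=0):
    rng = np.random.default_rng(seed)
    return {"W1": rng.normal(0.0, 0.5, (n_in, n_hidden)), "b1": np.zeros(n_hidden),
            "W2": rng.normal(0.0, 0.5, (n_hidden, n_out)), "b2": np.zeros(n_out)}

def loss_and_grads(params, X, T):
    # Forward pass
    H = sigmoid(X @ params["W1"] + params["b1"])
    Y = sigmoid(H @ params["W2"] + params["b2"])
    n = X.shape[0]
    loss = 0.5 * np.sum((Y - T) ** 2) / n
    # Backward pass: the error signal is built from the targets T
    dZ2 = (Y - T) / n * Y * (1.0 - Y)        # through the output sigmoid
    dZ1 = (dZ2 @ params["W2"].T) * H * (1.0 - H)  # propagated to the hidden layer
    grads = {"W2": H.T @ dZ2, "b2": dZ2.sum(axis=0),
             "W1": X.T @ dZ1, "b1": dZ1.sum(axis=0)}
    return loss, grads

def train(params, X, T, lr=0.5, epochs=200):
    losses = []
    for _ in range(epochs):
        loss, grads = loss_and_grads(params, X, T)
        for k in params:
            params[k] -= lr * grads[k]       # gradient-descent step
        losses.append(loss)
    return losses

# Labeled XOR data: inputs X with their target outputs T
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
T = np.array([[0], [1], [1], [0]], dtype=float)

params = init_params(2, 3, 1)
losses = train(params, X, T)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

`train` runs plain full-batch gradient descent; whether it actually solves XOR within a given number of epochs depends on the initialization and learning rate.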

Universal Approximation Theorem

A feedforward MLP with a single hidden layer of finitely many units can, in theory, approximate any continuous function on a closed, bounded domain to arbitrary accuracy

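- In practice, such a network may not be trainable with BP: the theorem shows the weights exist but says nothing about how to find them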
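To make the theorem concrete, here is a minimal constructive sketch in Python with NumPy (assumed available). It does not train anything: the hidden weights are set by hand so that each steep sigmoid unit switches on at a breakpoint, and the output weights are the jumps between neighbouring samples of the target function. The target `np.sin` on [0, π], the `steepness` value and the name `build_approximator` are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written via tanh to avoid overflow in exp
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def build_approximator(f, a, b, n_hidden, steepness=500.0):
    """One-hidden-layer net that approximates f on [a, b] as a sum of steps."""
    centers = np.linspace(a, b, n_hidden + 1)       # sample points of f
    values = f(centers)
    thresholds = (centers[:-1] + centers[1:]) / 2   # where each unit switches on
    w_hidden = np.full(n_hidden, steepness)         # steep slope ~ step function
    b_hidden = -steepness * thresholds
    w_out = np.diff(values)                         # jump contributed by each unit
    b_out = values[0]                               # value before any step

    def net(x):
        x = np.asarray(x, dtype=float)
        h = sigmoid(np.outer(x, w_hidden) + b_hidden)  # hidden activations
        return h @ w_out + b_out                       # linear output unit
    return net

xs = np.linspace(0.0, np.pi, 1000)
for n in (5, 20, 100):
    net = build_approximator(np.sin, 0.0, np.pi, n)
    print(n, np.max(np.abs(net(xs) - np.sin(xs))))
```

Adding hidden units shrinks the maximum error. Because the weights come from sampling f directly rather than from BP, the construction also shows that existence of a good network is separate from being able to learn it.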

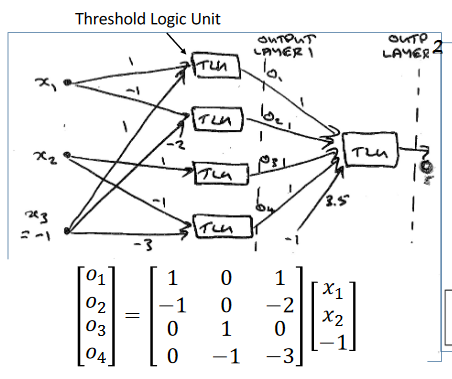

Weight Matrix

- Compute a layer's output with one matrix multiplication (weight matrix times input vector, plus bias), then apply the activation

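- The TLU (threshold logic unit) is a hard limiter: its output steps up to 1 once the weighted sum plus bias crosses zero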

- The inputs must all be 1 to overcome the -3.5 bias and force the output to 1

- Combining TLUs across layers this way can generate a non-linear decision boundary
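A minimal NumPy sketch (NumPy assumed available) of how these pieces can fit together. The hidden weights below are hypothetical, chosen so that four hidden TLUs each test one side of the unit square; the output TLU uses weights of 1 and the -3.5 bias from the notes, so it fires only when all four hidden units output 1. Each layer is one matrix multiplication followed by the hard limiter, and the resulting decision region is a square, which a single TLU (a straight-line boundary) cannot produce.

```python
import numpy as np

def tlu(z):
    # Hard limiter: 1 where z >= 0, else 0
    return (z >= 0).astype(float)

# Hidden layer: 4 TLUs, one row per unit, columns are the inputs (x, y)
W_hidden = np.array([[ 1.0,  0.0],   # fires if x >= 0
                     [-1.0,  0.0],   # fires if x <= 1
                     [ 0.0,  1.0],   # fires if y >= 0
                     [ 0.0, -1.0]])  # fires if y <= 1
b_hidden = np.array([0.0, 1.0, 0.0, 1.0])

# Output TLU: sum of 4 hidden outputs must exceed 3.5, i.e. all four are 1
W_out = np.array([[1.0, 1.0, 1.0, 1.0]])
b_out = np.array([-3.5])

def forward(X):
    # X has shape (n_samples, 2); each layer is a single matrix multiplication
    h = tlu(X @ W_hidden.T + b_hidden)
    return tlu(h @ W_out.T + b_out).ravel()

points = np.array([[0.5, 0.5], [1.5, 0.5], [-0.2, 0.5], [0.5, 2.0]])
print(forward(points))  # [1. 0. 0. 0.]
```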