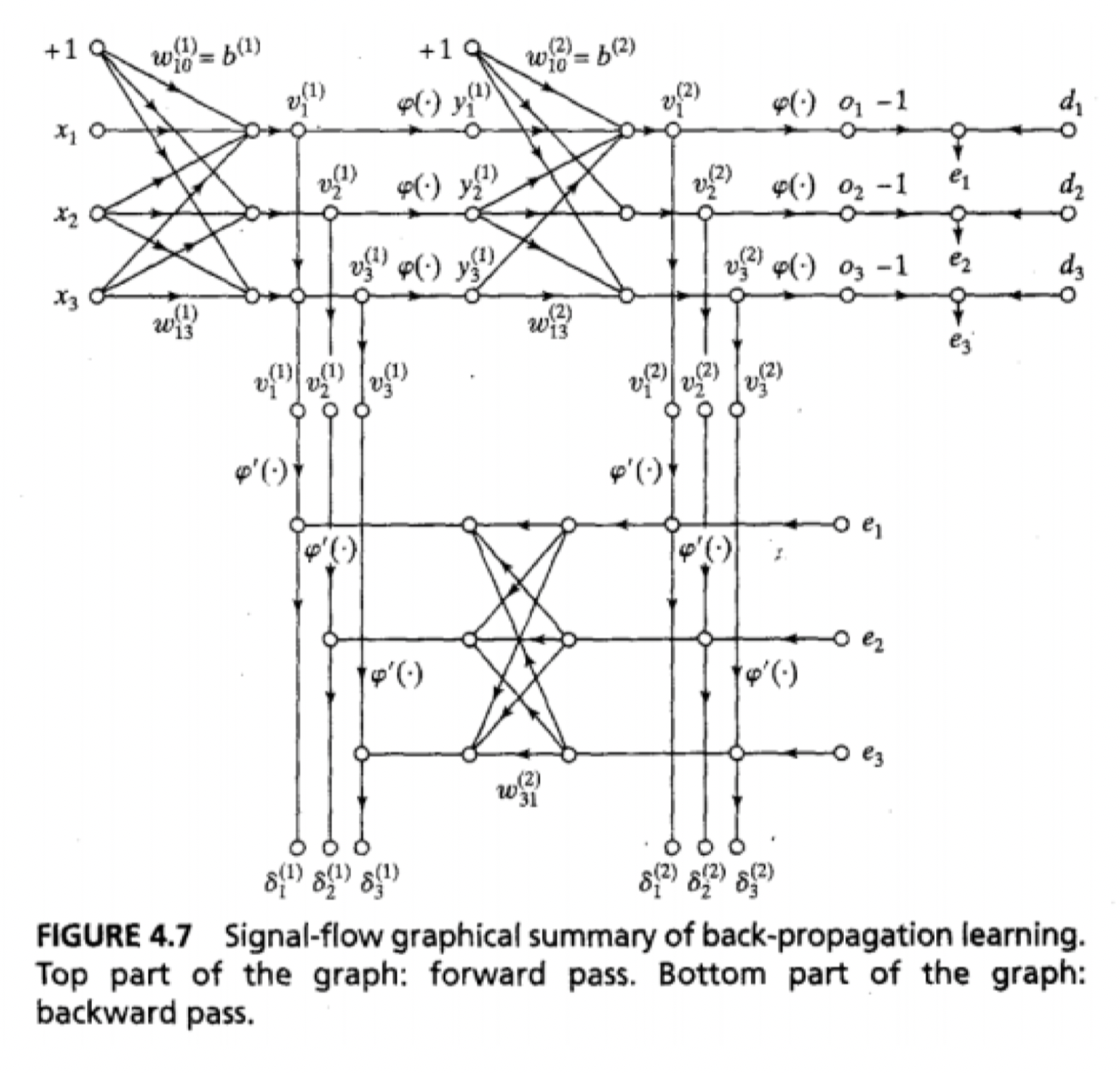

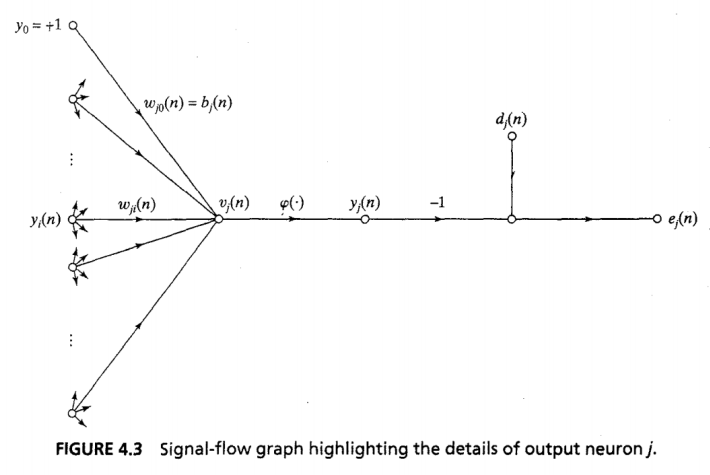

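Error signal graph

- Error signal

- Net internal sum

- Output

- Instantaneous sum of squared errors, summed over the output (o/p) layer nodes

- Average squared error

- From 4: the sum of squared errors differentiated with respect to the error signal

- From 1: the error signal differentiated with respect to the output

- From 3 (note the prime): the output differentiated with respect to the net internal sum, i.e. the derivative of the activation function

- From 2: the net internal sum differentiated with respect to the weight

Composite: the four factors multiplied together (chain rule)

Gradients

- Output local

- Hidden local

Weight Correction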
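The equations behind these labels are in the images. Assuming the standard backpropagation notation, they most likely read as below. The symbols $e_j, d_j, y_j, v_j, w_{ji}, \varphi_j, \mathcal{E}, N, \eta, \delta_j$ are assumptions: neuron $j$ is fed by neuron $i$, $n$ is the iteration, $C$ is the set of output-layer nodes, and the numbering (1) to (5) follows the order of the definitions above, which matches the "From 1" to "From 4" references.

$$
\begin{aligned}
e_j(n) &= d_j(n) - y_j(n) && \text{(1) error signal} \\
v_j(n) &= \sum_i w_{ji}(n)\, y_i(n) && \text{(2) net internal sum} \\
y_j(n) &= \varphi_j\big(v_j(n)\big) && \text{(3) output} \\
\mathcal{E}(n) &= \tfrac{1}{2} \sum_{j \in C} e_j^2(n) && \text{(4) instantaneous sum of squared errors} \\
\mathcal{E}_{\text{av}} &= \frac{1}{N} \sum_{n=1}^{N} \mathcal{E}(n) && \text{(5) average squared error} \\[6pt]
\frac{\partial \mathcal{E}(n)}{\partial w_{ji}(n)}
&= \underbrace{\frac{\partial \mathcal{E}}{\partial e_j}}_{\text{from (4)}}
   \underbrace{\frac{\partial e_j}{\partial y_j}}_{\text{from (1)}}
   \underbrace{\frac{\partial y_j}{\partial v_j}}_{\text{from (3)}}
   \underbrace{\frac{\partial v_j}{\partial w_{ji}}}_{\text{from (2)}}
 = e_j(n)\,(-1)\,\varphi_j'\big(v_j(n)\big)\,y_i(n) && \text{composite} \\[6pt]
\delta_j(n) &= e_j(n)\,\varphi_j'\big(v_j(n)\big) && \text{output local gradient} \\
\delta_j(n) &= \varphi_j'\big(v_j(n)\big) \sum_k \delta_k(n)\, w_{kj}(n) && \text{hidden local gradient} \\
\Delta w_{ji}(n) &= -\eta\, \frac{\partial \mathcal{E}(n)}{\partial w_{ji}(n)} = \eta\, \delta_j(n)\, y_i(n) && \text{weight correction}
\end{aligned}
$$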

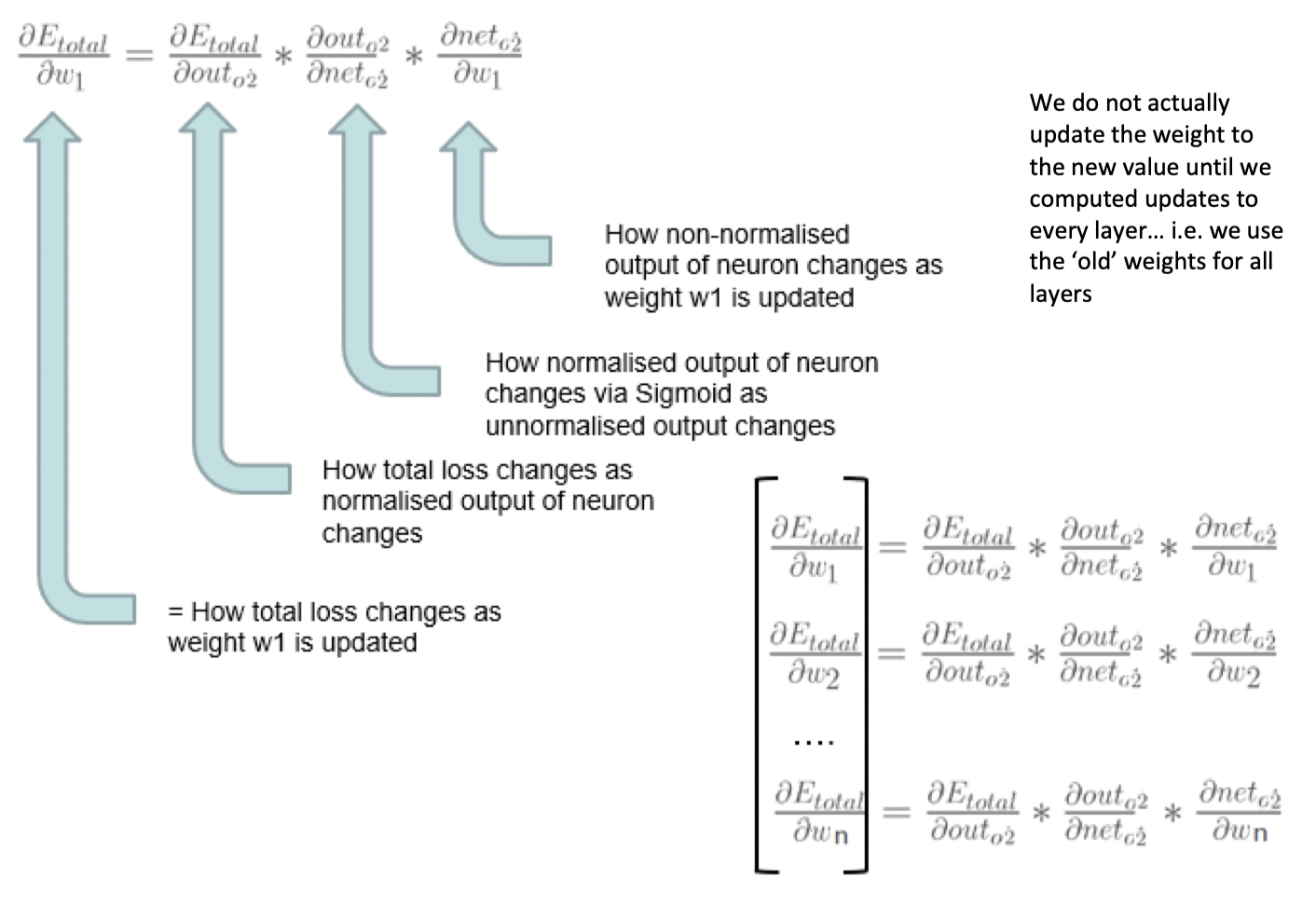

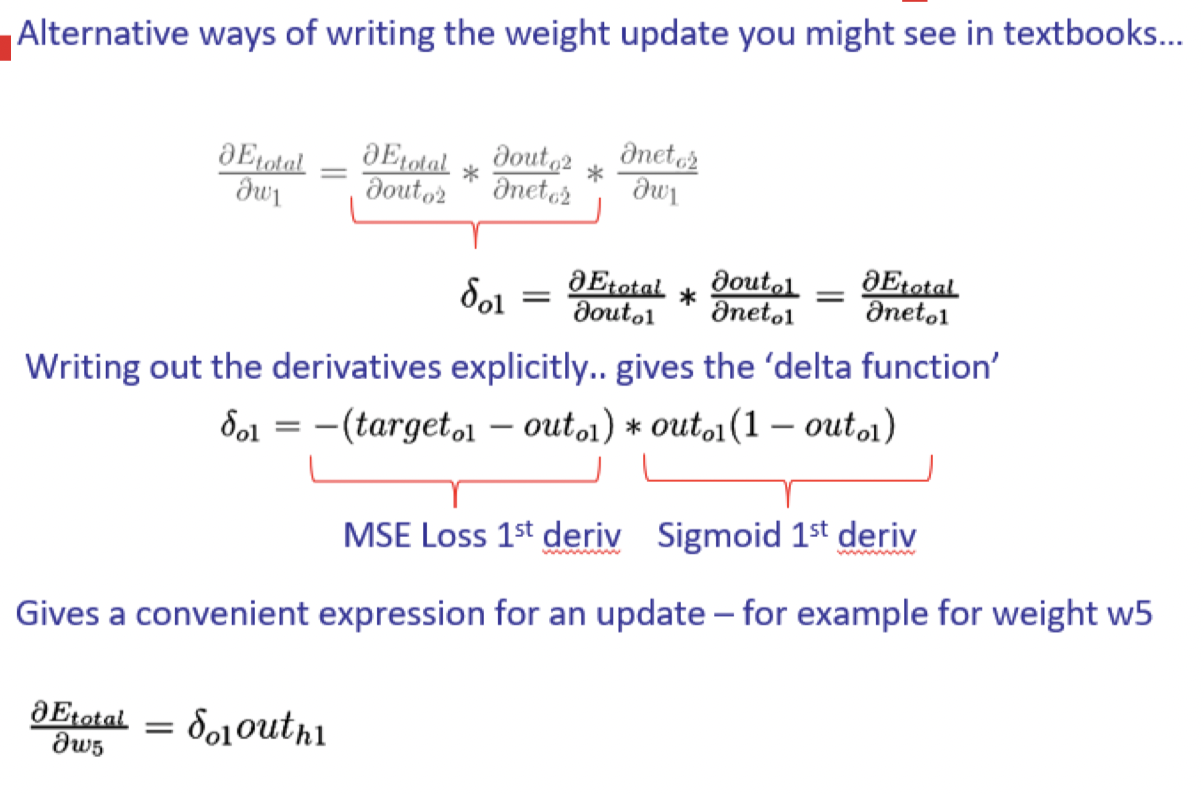

- We want the partial derivative of the error with respect to each weight

- By the chain rule this is a product of 4 partial derivatives:

  - Sum of squared errors with respect to the error at one output node

  - Error with respect to output

  - Output with respect to the pre-activation sum

  - Pre-activation sum with respect to the weight: all other weights are held constant, so their terms go to zero, leaving just the input signal feeding that weight

- Collect the 3 boxed terms into delta, the local gradient

- Applied raw, the weight correction can converge too slowly and can get stuck

- Adding momentum helps with both
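The chain-rule steps above can be checked numerically for a single output node. This is a minimal sketch under stated assumptions: a logistic activation (the notes do not fix the activation function), hypothetical weights and inputs, and a central finite difference to confirm that the analytic derivative $-\delta_j y_i$ matches the slope of $\tfrac{1}{2}e_j^2$. The names `output_gradients` and `instantaneous_error` are illustrative, not from the notes.

```python
import math


def sigmoid(v):
    """Logistic activation phi(v); an assumption, the notes do not fix phi."""
    return 1.0 / (1.0 + math.exp(-v))


def sigmoid_prime(v):
    """phi'(v) = phi(v) * (1 - phi(v)) for the logistic function."""
    s = sigmoid(v)
    return s * (1.0 - s)


def net_sum(w, y_in):
    """Net internal (pre-activation) sum v_j = sum_i w_ji * y_i."""
    return sum(wi * yi for wi, yi in zip(w, y_in))


def instantaneous_error(w, y_in, d):
    """E = 1/2 * e_j^2 for a single output node j."""
    e = d - sigmoid(net_sum(w, y_in))  # error signal e_j = d_j - y_j
    return 0.5 * e * e


def output_gradients(w, y_in, d):
    """Return the local gradient delta_j and dE/dw_ji for every input i."""
    v = net_sum(w, y_in)
    e = d - sigmoid(v)
    # First three chain-rule factors: e_j * (-1) * phi'(v_j).
    # delta_j collects them with the sign flipped: delta_j = e_j * phi'(v_j).
    delta = e * sigmoid_prime(v)
    # Fourth factor dv_j/dw_ji = y_i, so dE/dw_ji = -delta_j * y_i.
    grads = [-delta * yi for yi in y_in]
    return delta, grads


# Hypothetical values: y_in[0] = 1.0 plays the role of a bias input.
w = [0.5, -0.3, 0.8]
y_in = [1.0, 0.2, -0.4]
d = 1.0    # desired response d_j
eta = 0.1  # learning rate

delta, grads = output_gradients(w, y_in, d)

# Central-difference check of each analytic partial derivative.
h = 1e-6
for i in range(len(w)):
    w_plus, w_minus = list(w), list(w)
    w_plus[i] += h
    w_minus[i] -= h
    numeric = (instantaneous_error(w_plus, y_in, d)
               - instantaneous_error(w_minus, y_in, d)) / (2 * h)
    print(f"w[{i}]: analytic {grads[i]:+.8f}  numeric {numeric:+.8f}")

# Weight correction: delta_w_ji = -eta * dE/dw_ji = eta * delta_j * y_i
delta_w = [eta * delta * yi for yi in y_in]
print("weight correction:", delta_w)
```

The analytic and numeric columns should agree to several decimal places; the correction moves each weight downhill on the error.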

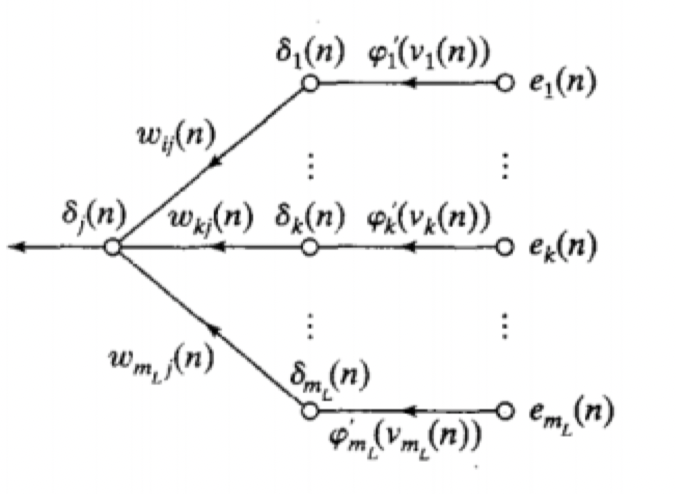

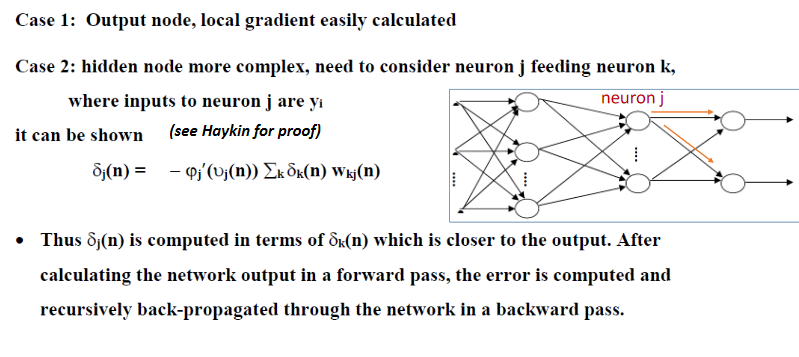

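- Nodes further back (hidden layers)

- More complicated: they have no target value, so there is no direct error signal

- Local gradient is the sum of the later layer's local gradients, each multiplied by the weight connecting back to this node (orange)

- That sum is multiplied by the derivative of the activation function at the node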
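The hidden-node rule above can be sketched for a small two-layer network. Assumptions: logistic activations in both layers, no bias on the output layer, and hypothetical weights and data. The script computes output deltas first, then propagates them back through the output weights to get the hidden deltas, and a finite-difference check confirms that $-\delta_j x_i$ is the true gradient for a hidden weight. `forward`, `error` and `local_gradients` are illustrative names.

```python
import math


def sigmoid(v):
    """Logistic activation (an assumption; any differentiable phi works)."""
    return 1.0 / (1.0 + math.exp(-v))


def forward(W_hid, W_out, x):
    """Two-layer network; returns hidden and output activations."""
    y_hid = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in W_hid]
    y_out = [sigmoid(sum(w * yj for w, yj in zip(row, y_hid))) for row in W_out]
    return y_hid, y_out


def error(W_hid, W_out, x, d):
    """Instantaneous sum of squared errors over the output nodes."""
    _, y_out = forward(W_hid, W_out, x)
    return 0.5 * sum((dk - yk) ** 2 for dk, yk in zip(d, y_out))


def local_gradients(W_hid, W_out, x, d):
    """Backward pass: output deltas first, then hidden deltas."""
    y_hid, y_out = forward(W_hid, W_out, x)
    # Output node k: delta_k = e_k * phi'(v_k); logistic phi'(v) = y * (1 - y).
    delta_out = [(dk - yk) * yk * (1.0 - yk) for dk, yk in zip(d, y_out)]
    # Hidden node j: delta_j = phi'(v_j) * sum_k delta_k * w_kj,
    # where w_kj is the weight from hidden node j forward to output node k.
    delta_hid = [
        yj * (1.0 - yj) * sum(delta_out[k] * W_out[k][j] for k in range(len(W_out)))
        for j, yj in enumerate(y_hid)
    ]
    return delta_hid, delta_out


# Hypothetical weights and data: x[0] = 1.0 acts as a bias input.
x = [1.0, 0.5, -1.0]
W_hid = [[0.1, -0.2, 0.4], [0.3, 0.6, -0.5]]  # W_hid[j][i]: input i -> hidden j
W_out = [[0.7, -0.4], [-0.2, 0.9]]            # W_out[k][j]: hidden j -> output k
d = [1.0, 0.0]

delta_hid, delta_out = local_gradients(W_hid, W_out, x, d)
# Gradient of the error with respect to a hidden weight: dE/dw_ji = -delta_j * x_i
grad_hid = [[-dj * xi for xi in x] for dj in delta_hid]
print("hidden deltas:", delta_hid)
print("dE/dW_hid:", grad_hid)
```

The weight correction for a hidden weight then has the same form as for an output weight, $\eta\,\delta_j\,x_i$; only the way $\delta_j$ is obtained differs.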

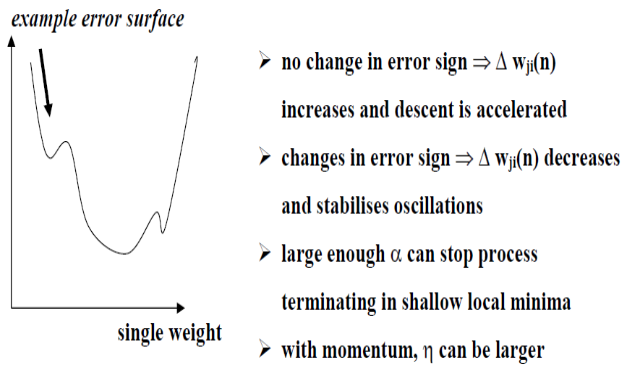

Global Minimum

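- Much more complex error surface than least-mean-squares (LMS), whose error surface is quadratic with a single minimum

- No guarantee of converging to the global minimum

- Non-linear optimisation

- Momentum

  - Adds a term proportional to the change in weights at the last iteration

  - Can carry the weights past shallow local minima when descending quickly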
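A common form of the momentum update is $\Delta w_{ji}(n) = \alpha\,\Delta w_{ji}(n-1) + \eta\,\delta_j(n)\,y_i(n)$ with momentum constant $0 \le \alpha < 1$; the exact formula used in these notes is in the images, so treat this as an assumption. The sketch below applies the same rule to a hypothetical one-dimensional error $f(w) = \tfrac{1}{2}w^2$ (gradient $w$, minimum at $w = 0$) to show both effects noted above: faster descent, and overshooting the minimum. The function `descend` and all parameter values are illustrative.

```python
def descend(grad, w0, eta, alpha, tol=1e-3, max_iter=10_000):
    """Gradient descent with a momentum term.

    Each step: dw(n) = alpha * dw(n-1) - eta * grad(w), i.e. the usual
    gradient step plus a fraction alpha of the previous change.
    alpha = 0 gives plain gradient descent. Stops when both the weight and
    its latest change are within tol of zero (the minimum of the test
    function below is at w = 0). Returns (iterations used, trajectory of w).
    """
    w, dw = w0, 0.0
    path = [w]
    for n in range(1, max_iter + 1):
        dw = alpha * dw - eta * grad(w)
        w += dw
        path.append(w)
        if abs(w) < tol and abs(dw) < tol:
            return n, path
    return max_iter, path


def grad(w):
    """Gradient of the hypothetical test error f(w) = 0.5 * w**2."""
    return w


plain_iters, plain_path = descend(grad, w0=1.0, eta=0.01, alpha=0.0)
mom_iters, mom_path = descend(grad, w0=1.0, eta=0.01, alpha=0.9)

print("plain:   ", plain_iters, "iterations, min w =", min(plain_path))
print("momentum:", mom_iters, "iterations, min w =", min(mom_path))
```

With these settings the momentum run needs far fewer iterations, but its trajectory dips below zero before settling: it overshoots the minimum, which is the same behaviour that lets it roll past a shallow local minimum. Plain descent approaches from one side only.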