Error-Correcting Perceptron Learning

- Uses a McCulloch-Pitts neuron, i.e., one with a hard limiter

- Unity increment, i.e., a learning rate of 1

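If the $n$-th member of the training set, $\mathbf{x}(n)$, is correctly classified by the weight vector $\mathbf{w}(n)$ computed at the $n$-th iteration of the algorithm, no correction is made to the weight vector of the perceptron, in accordance with the rule:

$$
\begin{aligned}
\mathbf{w}(n+1) &= \mathbf{w}(n) \quad \text{if } \mathbf{w}^T(n)\,\mathbf{x}(n) > 0 \text{ and } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_1 \\
\mathbf{w}(n+1) &= \mathbf{w}(n) \quad \text{if } \mathbf{w}^T(n)\,\mathbf{x}(n) \le 0 \text{ and } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_2
\end{aligned}
$$
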
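Otherwise, the weight vector of the perceptron is updated in accordance with the rule:

$$
\begin{aligned}
\mathbf{w}(n+1) &= \mathbf{w}(n) - \eta(n)\,\mathbf{x}(n) \quad \text{if } \mathbf{w}^T(n)\,\mathbf{x}(n) > 0 \text{ and } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_2 \\
\mathbf{w}(n+1) &= \mathbf{w}(n) + \eta(n)\,\mathbf{x}(n) \quad \text{if } \mathbf{w}^T(n)\,\mathbf{x}(n) \le 0 \text{ and } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_1
\end{aligned}
$$

where the learning-rate parameter $\eta(n)$ controls the size of each correction; with unity increment, $\eta(n) = 1$.
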
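The two case rules can be sketched directly in Python. This is a minimal illustration, not a reference implementation: the helper names `dot` and `case_update` are hypothetical, vectors are plain lists, and each input is assumed to carry a leading +1 entry so that `w[0]` plays the role of a bias.

```python
def dot(w, x):
    """Inner product w^T x of two equal-length sequences."""
    return sum(wi * xi for wi, xi in zip(w, x))


def case_update(w, x, in_class_c1, eta=1.0):
    """One step of the error-correction rule, written case by case.

    w, x        -- weight and input vectors; x is assumed (hypothetically)
                   to carry a leading +1 entry so that w[0] acts as a bias.
    in_class_c1 -- True if x belongs to class C1, False if it belongs to C2.
    eta         -- learning-rate parameter eta(n); 1.0 gives unity increment.
    """
    v = dot(w, x)
    if in_class_c1 and v <= 0:
        # C1 pattern misclassified (w^T x <= 0): move w towards x
        return [wi + eta * xi for wi, xi in zip(w, x)]
    if not in_class_c1 and v > 0:
        # C2 pattern misclassified (w^T x > 0): move w away from x
        return [wi - eta * xi for wi, xi in zip(w, x)]
    # Correctly classified: w(n+1) = w(n)
    return list(w)


print(case_update([0.0, 0.0, 0.0], [1.0, 2.0, -1.0], True))  # [1.0, 2.0, -1.0]
```

Starting from $\mathbf{w} = \mathbf{0}$, a class-$\mathcal{C}_1$ pattern gives $\mathbf{w}^T\mathbf{x} = 0 \le 0$, so it counts as misclassified and the call returns $\mathbf{x}$ itself.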
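1. Initialisation. Set $\mathbf{w}(0) = \mathbf{0}$. Then perform the following computations for time step $n = 1, 2, \ldots$

2. Activation. At time step $n$, activate the perceptron by applying the continuous-valued input vector $\mathbf{x}(n)$ and the desired response $d(n)$.

3. Computation of Actual Response. Compute the actual response of the perceptron:

   $$y(n) = \operatorname{sgn}\left[\mathbf{w}^T(n)\,\mathbf{x}(n)\right]$$

   where $\operatorname{sgn}(\cdot)$ is the signum function.

4. Adaptation of Weight Vector. Update the weight vector of the perceptron:

   $$\mathbf{w}(n+1) = \mathbf{w}(n) + \eta\left[d(n) - y(n)\right]\mathbf{x}(n)$$

   where

   $$d(n) = \begin{cases} +1 & \text{if } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_1 \\ -1 & \text{if } \mathbf{x}(n) \text{ belongs to class } \mathcal{C}_2 \end{cases}$$

5. Continuation. Increment time step $n$ by one and go back to step 2.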
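A sketch of the five steps above in plain Python follows. It assumes the training set is presented cyclically, each input is augmented with a leading +1 so that `w[0]` acts as the bias, and $\operatorname{sgn}(0) = -1$ (consistent with the rule that $\mathbf{w}^T\mathbf{x} \le 0$ means class $\mathcal{C}_2$). The function names and the `max_epochs` guard are hypothetical additions; the algorithm as stated loops indefinitely.

```python
def sgn(v):
    """Hard limiter: +1 for v > 0, -1 otherwise (v = 0 maps to -1)."""
    return 1 if v > 0 else -1


def train_perceptron(samples, eta=1.0, max_epochs=100):
    """Perceptron convergence algorithm following steps 1-5.

    samples    -- list of (x, d) pairs; x excludes the bias entry and
                  d is +1 for class C1, -1 for class C2.
    max_epochs -- hypothetical stopping guard, not part of the original steps.
    Returns (w, converged) with w = [b, w1, ..., wm].
    """
    m = len(samples[0][0])
    w = [0.0] * (m + 1)                                    # Step 1: w(0) = 0
    for _ in range(max_epochs):
        errors = 0
        for x, d in samples:                               # Step 2: apply x(n), d(n)
            xa = [1.0] + list(x)                           # prepend +1 for the bias
            y = sgn(sum(wi * xi for wi, xi in zip(w, xa))) # Step 3: y(n)
            if y != d:
                errors += 1
            # Step 4: w(n+1) = w(n) + eta [d(n) - y(n)] x(n); zero change if d == y
            w = [wi + eta * (d - y) * xi for wi, xi in zip(w, xa)]
        # Step 5 is the loop itself; stop once a full pass makes no error
        if errors == 0:
            return w, True
    return w, False


AND = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]
w, converged = train_perceptron(AND)
print(converged)  # True
```

Because $d(n) - y(n)$ is either $0$ or $\pm 2$, each correction in step 4 adds $\pm 2\eta\,\mathbf{x}(n)$. For the same $\eta$, that is twice the step of the case-by-case rule.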

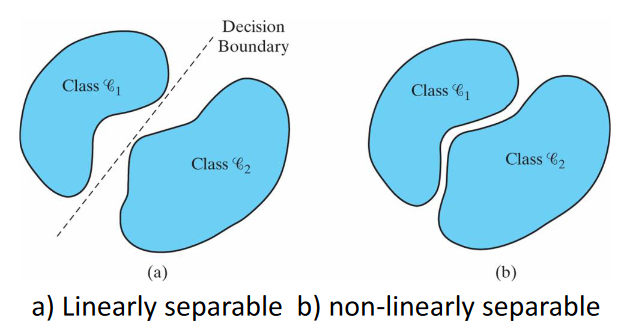

- Convergence is guaranteed provided that

  - Patterns are linearly separable

    - Non-overlapping classes

    - Linear separation boundary

  - Learning rate is not too high

- Two conflicting requirements on the learning rate $\eta$

  - Averaging of past inputs to provide stable weight estimates

    - Requires a small $\eta$

  - Fast adaptation with respect to real changes in the underlying distribution of the process responsible for generating the input vector $\mathbf{x}$

    - Requires a large $\eta$

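The linear-separability condition can be seen in a small experiment. It uses the same update as step 4 and a hypothetical cap on the number of passes, since the original algorithm has no stopping rule. AND on $\{0,1\}^2$ with $\pm 1$ labels is linearly separable. XOR is not, so no weight vector classifies all four XOR patterns and the error count never reaches zero.

```python
def train(samples, eta=1.0, max_epochs=50):
    """Compact perceptron training: w(0) = 0, w += eta * (d - y) * x.

    Returns True if some full pass over the data makes no mistakes within
    max_epochs (a hypothetical cap, not part of the original algorithm).
    """
    w = [0.0] * (len(samples[0][0]) + 1)
    for _ in range(max_epochs):
        errors = 0
        for x, d in samples:
            xa = (1.0, *x)                                         # bias input +1
            y = 1 if sum(a * b for a, b in zip(w, xa)) > 0 else -1 # hard limiter
            if y != d:
                errors += 1
                w = [wi + eta * (d - y) * xi for wi, xi in zip(w, xa)]
        if errors == 0:
            return True
    return False


AND = [((0, 0), -1), ((0, 1), -1), ((1, 0), -1), ((1, 1), 1)]  # linearly separable
XOR = [((0, 0), -1), ((0, 1), 1), ((1, 0), 1), ((1, 1), -1)]   # not linearly separable

print("AND converged:", train(AND))  # AND converged: True
print("XOR converged:", train(XOR))  # XOR converged: False
```

Raising `max_epochs` does not change the XOR result. The failure comes from the violated separability condition, not from too few iterations.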